Hi, I want to save each iteration's response in a CSV file, alongside the CSV file I gave as input, so I can see which input data produced which response. When I run the project for three iterations, all three iterations execute, but the CSV file just shows the third iteration's data three times. If anyone knows what's going on, I'd appreciate the help.

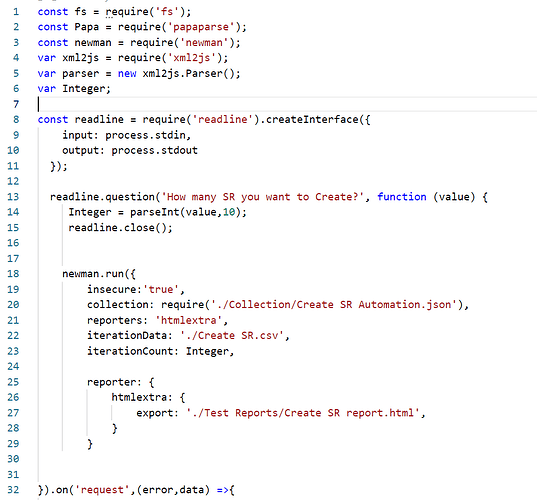

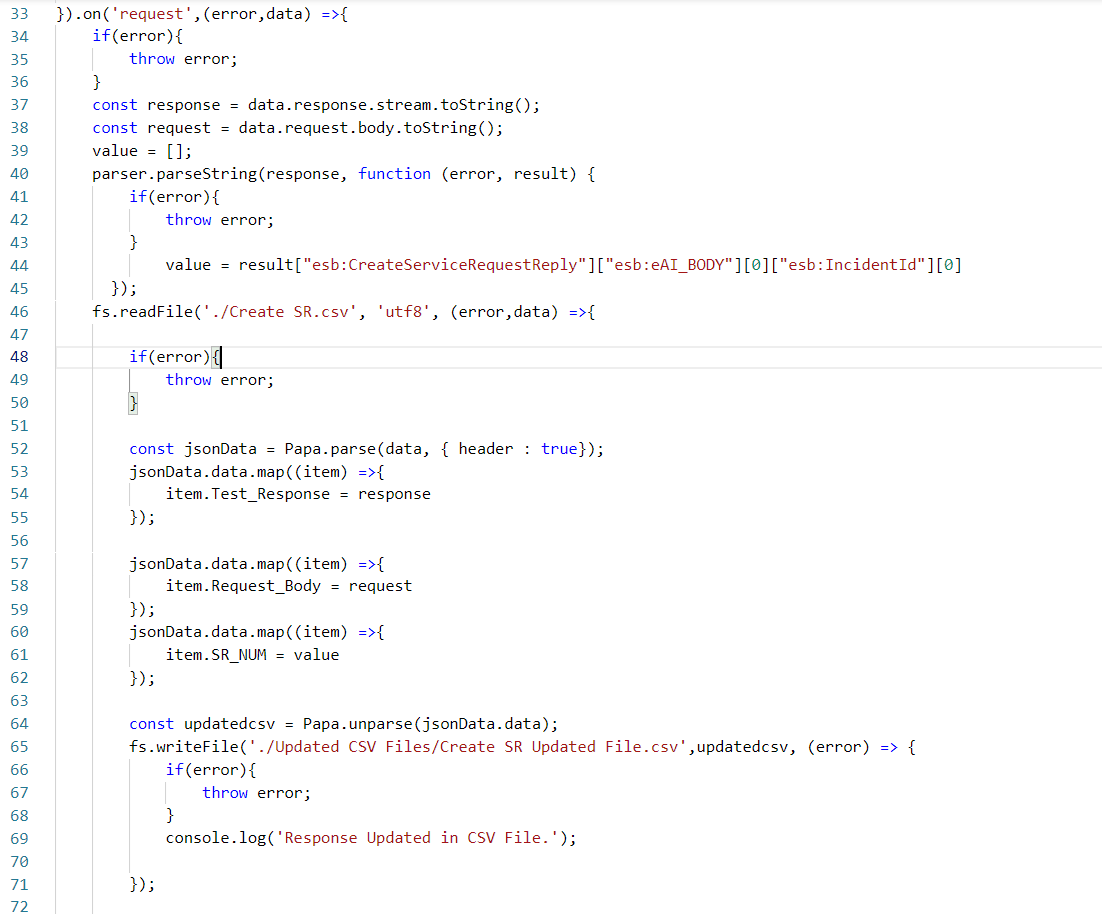

Code screenshots are attached above.

Hello there,

I don't think you can write to disk from Postman itself.

If you want to write to disk, first create a local server, then make a POST request to it using `pm.sendRequest`.

Please see this post for more details.
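The idea can be sketched as a tiny Node.js server that appends every POST body it receives as a new CSV line, so earlier lines are never overwritten. This is only a sketch under assumptions: the `createCsvSink` and `toCsvLine` names, the port and the output path are hypothetical, and the linked post may describe a different setup.

```javascript
const http = require('http');
const fs = require('fs');

// Quote a field only when it contains a comma, double quote or newline
// (RFC 4180 style); embedded double quotes are doubled.
function toCsvField(value) {
  const s = String(value);
  return /[",\r\n]/.test(s) ? '"' + s.replace(/"/g, '""') + '"' : s;
}

function toCsvLine(fields) {
  return fields.map(toCsvField).join(',') + '\n';
}

// Hypothetical local "sink": each POST body is appended as one CSV row
// (timestamp, body). The server is created here but not started.
function createCsvSink(filePath) {
  return http.createServer((req, res) => {
    if (req.method !== 'POST') {
      res.statusCode = 405;
      res.end();
      return;
    }
    let body = '';
    req.setEncoding('utf8');
    req.on('data', (chunk) => { body += chunk; });
    req.on('end', () => {
      const line = toCsvLine([new Date().toISOString(), body]);
      fs.appendFile(filePath, line, (err) => {
        res.statusCode = err ? 500 : 200;
        res.end(err ? err.message : 'ok');
      });
    });
  });
}

module.exports = { toCsvLine, createCsvSink };
```

You would start it with `createCsvSink('./responses.csv').listen(3000);` and, from a request's Tests script, send the response with `pm.sendRequest({ url: 'http://localhost:3000', method: 'POST', body: { mode: 'raw', raw: pm.response.text() } }, (err) => { if (err) console.log(err); });`.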

I hope this will help you.

If you have any other questions, feel free to ask.

Best Regards,

Rishi Purwar

Since you're using Newman as a library to run the collection from a script, you can write anything you like to a file using Node modules such as `fs`.
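For example, a handler on Newman's `request` event can append one line per request. A minimal sketch, assuming `run` is the EventEmitter returned by `newman.run(...)` (the question's script already chains `.on('request', ...)` on it); `attachLineLogger` and the output path are hypothetical names.

```javascript
const fs = require('fs');

// Append one CSV line per request: the iteration index and the response body.
// `run` is assumed to be the EventEmitter returned by newman.run(...).
function attachLineLogger(run, filePath) {
  run.on('request', (err, args) => {
    if (err) {
      console.error(err);
      return;
    }
    const iteration = args.cursor.iteration;
    const response = args.response ? args.response.stream.toString() : '';
    // Double any quotes and flatten newlines so the body fits in one quoted CSV field.
    const field = '"' + response.replace(/"/g, '""').replace(/\r?\n/g, ' ') + '"';
    fs.appendFileSync(filePath, `${iteration},${field}\n`);
  });
  return run;
}
```

Because `appendFileSync` only ever adds to the end of the file, each iteration lands on its own line instead of rewriting earlier ones.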

My bad! Thanks for pointing that out.

I appreciate your help.

Yes, as you can see, I'm using Newman as a library and trying to write the responses to a CSV file with the Papa Parse library. The issue is that Newman runs all its iterations, but the CSV only keeps the last response. Please guide me on how to save each response on a separate line in the CSV.

Could you update the post with the raw code rather than an image of it? That makes it easier to debug locally.

Also, please include an example of the response body.

Please find the raw code below, as well as an example of the response.

```javascript
const fs = require('fs');
const Papa = require('papaparse');
const newman = require('newman');
var xml2js = require('xml2js');
var parser = new xml2js.Parser();
var Integer;

const readline = require('readline').createInterface({
  input: process.stdin,
  output: process.stdout
});

readline.question('How many SR you want to Create?', function (value) {
  Integer = parseInt(value, 10);
  readline.close();

  newman.run({
    insecure: 'true',
    collection: require('./Collection/Create SR Automation.json'),
    reporters: 'htmlextra',
    iterationCount: Integer,
    reporter: {
      htmlextra: {
        export: './Test Reports/Create SR report.html',
      }
    }
  }).on('request', (error, data) => {
    if (error) {
      throw error;
    }

    const response = data.response.stream.toString();
    const request = data.request.body.toString();
    value = [];

    parser.parseString(response, function (error, result) {
      if (error) {
        throw error;
      }
      value = result["esb:CreateServiceRequestReply"]["esb:eAI_BODY"][0]["esb:IncidentId"][0]
    });

    fs.readFile('./Updated CSV Files/Create SR Updated File.csv', 'utf8', (error, data) => {
      if (error) {
        throw error;
      }

      const jsonData = Papa.parse(data, { header: true });

      jsonData.data.map((item) => {
        item.Test_Response = response
      });

      jsonData.data.map((item) => {
        item.Request_Body = request
      });

      jsonData.data.map((item) => {
        item.SR_NUM = value
      });

      const updatedcsv = Papa.unparse(jsonData.data);

      fs.writeFile('./Updated CSV Files/Create SR Updated File.csv', updatedcsv, (error) => {
        if (error) {
          throw error;
        }
        console.log('Response Updated in CSV File.');
      });
    });
  }).on('beforeDone', (error, data) => {
    if (error) {
      throw error;
    }

    const findFailures = (a, c) => {
      return a && (c.error === null || c.error === undefined)
    }

    const testResults = data.summary.run.executions.reduce((a, c) => {
      if (a[c.cursor.iteration] !== 'FAILED') {
        a[c.cursor.iteration] = c.assertions.reduce(findFailures, true) ? 'PASSED' : 'FAILED';
      }
      return a;
    }, []);

    fs.readFile('./Updated CSV Files/Create SR Updated File.csv', 'utf8', (error, data) => {
      if (error) {
        throw error;
      }

      const jsonData = Papa.parse(data, { header: true });

      jsonData.data.map((item, index) => {
        item.Test_Status = testResults[index];
      });

      const updatedcsv = Papa.unparse(jsonData.data)

      fs.writeFile('./Updated CSV Files/Create SR Updated File.csv', updatedcsv, (error) => {
        if (error) {
          throw error;
        }
        console.log('Status Updated in CSV File.');
      });
    });
  });
});
```

And the response body looks like this:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<esb:CreateServiceRequestReply xmlns:esb="esb.ashghal.gov.qa">
  <esb:eAI_HEADER>
    <esb:referenceNum>a</esb:referenceNum>
    <esb:clientChannel>DSS</esb:clientChannel>
    <esb:requestTime>2021-01-18T14:53:28Z</esb:requestTime>
    <esb:retryFlag>N</esb:retryFlag>
    <esb:sucessfulReversalFlag>N</esb:sucessfulReversalFlag>
  </esb:eAI_HEADER>
  <esb:eAI_BODY>
    <esb:IncidentId>10091567</esb:IncidentId>
    <esb:IncidentUID>109498</esb:IncidentUID>
  </esb:eAI_BODY>
  <esb:eAI_STATUS>
    <esb:returnStatus>
      <esb:returnCode>000000</esb:returnCode>
      <esb:returnCodeDesc>Service Success</esb:returnCodeDesc>
    </esb:returnStatus>
  </esb:eAI_STATUS>
</esb:CreateServiceRequestReply>
```

I hope it's clearer now and you have a better understanding of my code and the problem. If you have any solution, I'm waiting for your response. Thank you in advance!

Hey @ahtashamali06,

I think you're overwriting the values with the three `jsonData.data.map(...)` calls in your `request` handler.

For example, in iteration 1 you iterate over all rows and set three columns on each one (`Test_Response`, `Request_Body`, `SR_NUM`). In iteration 2 you iterate over all rows again (possibly including the previous rows as well) and overwrite the same columns with the new values, so after the last iteration every row holds the last iteration's data.
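To see the effect in isolation, here is a dependency-free sketch that mimics only the three `map` calls, ignoring the asynchronous file reads and writes. It uses one `forEach`, which behaves the same here because the `map` return values are discarded. The `Name` column and the SR numbers are made-up sample data, and `writeIntoEveryRow` is a hypothetical name.

```javascript
// Mimics the request handler: every call touches *all* rows and writes
// the same three columns, so the last iteration's values end up everywhere.
function writeIntoEveryRow(rows, response, request, srNum) {
  rows.forEach((item) => {
    item.Test_Response = response;
    item.Request_Body = request;
    item.SR_NUM = srNum;
  });
  return rows;
}

// Three input rows, shaped like Papa.parse(csv, { header: true }).data.
const rows = [{ Name: 'a' }, { Name: 'b' }, { Name: 'c' }];

// Three simulated iterations, each with its own SR number.
['1001', '1002', '1003'].forEach((sr, i) => {
  writeIntoEveryRow(rows, `response ${i}`, `request ${i}`, sr);
});

console.log(rows.map((r) => r.SR_NUM)); // [ '1003', '1003', '1003' ]
```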

I'm not sure whether your end goal is to add columns to every row in the CSV or to add a row for each iteration. If you parse a CSV and add new attributes (like `SR_NUM`) to the existing objects instead of appending new objects to `jsonData.data` (an array), you get new columns, not new rows.

Either way, my hunch is that the values are being overwritten. To avoid that, you might need new column names for each iteration, or a change to the format.
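One way to keep one set of values per row, sketched under the assumption that row N of the CSV belongs to iteration N (the same index mapping the `beforeDone` handler already uses for `Test_Status`): key each result by `args.cursor.iteration` from the `request` event, and merge everything into the parsed rows once, after the run. It also assumes one request per iteration; with several requests you would need to filter, e.g. on `args.item.name`. `makeCollector`, `record` and `mergeInto` are hypothetical names.

```javascript
// Collect one result per iteration during the run, keyed by iteration index,
// then merge them into the parsed CSV rows once the run has finished.
function makeCollector() {
  const results = [];
  return {
    // Call from newman's 'request' handler with its args object and the
    // IncidentId parsed from the response.
    record(args, srNum) {
      results[args.cursor.iteration] = {
        Test_Response: args.response.stream.toString(),
        Request_Body: args.request.body ? args.request.body.toString() : '',
        SR_NUM: srNum,
      };
    },
    // Call with Papa.parse(csv, { header: true }).data: row N receives
    // iteration N's result; rows without a result are left unchanged.
    mergeInto(rows) {
      return rows.map((row, index) => ({ ...row, ...(results[index] || {}) }));
    },
  };
}
```

In the question's script, `collector.record(data, value)` would replace the three `jsonData.data.map(...)` calls, and a single `fs.readFile` / `Papa.unparse` / `fs.writeFile` in a `done` (or the existing `beforeDone`) handler would write the CSV once instead of once per request.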

I'm guessing, though; let me know if this helps!